Introduction to LLM Transformers

Large Language Models (LLMs) represent a significant milestone in artificial intelligence research and application. At the heart of this revolution lies the Transformer architecture, first introduced in the seminal 2017 paper "Attention Is All You Need." Built around the attention mechanism, these models redefined how machines process language. This post delves into the inner workings of LLM transformers, covering their historical context, architectural innovations, training dynamics, scaling laws, and the ethical considerations that come with advancing AI capabilities. Along the way, it offers summaries and insights for enthusiasts and professionals alike, and connects broader AI trends to practical tools, such as Google Drive and OneDrive integrations, for managing and accessing data.

The Evolution and Architecture of Transformer Models

The Transformer model fundamentally altered how we approach natural language processing. Initially designed for machine translation, the architecture quickly expanded into other domains. The original Transformer is divided into two components: the encoder and the decoder. The encoder “reads” the input text and transforms it into a set of abstract representations, while the decoder uses those representations, together with the output it has produced so far, to generate an output sequence such as translated text or a generated narrative.

Depending on the task at hand, models may adopt distinct configurations: encoder-only for tasks like sentence classification and named entity recognition; decoder-only for generative tasks such as text generation; or a hybrid encoder-decoder format for sequence-to-sequence applications like summarization and translation. This flexibility allows for tailored solutions that meet diverse requirements in the ever-expanding field of AI.
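One concrete difference between these configurations is which positions each token is allowed to attend to. In the original Transformer, the encoder's self-attention is bidirectional (every token sees the whole input), while the decoder's self-attention is causal (each token sees only itself and earlier tokens, so it cannot peek at the output it is about to generate). The minimal sketch below builds both masks; the function names are hypothetical, and the decoder's additional cross-attention to the encoder output is not shown.

```python
def attention_mask(seq_len, causal):
    """Return a seq_len x seq_len grid where mask[i][j] is True
    if position i is allowed to attend to position j."""
    # Bidirectional (encoder-style): every position is visible.
    # Causal (decoder-style): only positions j <= i are visible.
    return [[(not causal) or j <= i for j in range(seq_len)]
            for i in range(seq_len)]


def show(mask):
    """Print the mask as a grid of 1s (allowed) and 0s (blocked)."""
    for row in mask:
        print(" ".join("1" if allowed else "0" for allowed in row))


print("encoder (bidirectional):")
show(attention_mask(4, causal=False))
print("decoder (causal):")
show(attention_mask(4, causal=True))
```

Encoder-only models such as BERT use the first pattern, and decoder-only models such as GPT use the second, which is what lets them generate text one token at a time.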

Historical Milestones and Key Models

Since its introduction in June 2017, the Transformer architecture has evolved through a series of influential models. Each subsequent iteration has pushed the boundaries of what is possible with LLMs. Consider the following timeline:

- GPT (June 2018): The first pretrained transformer that demonstrated impressive adaptability across multiple language tasks.

- BERT (October 2018): An encoder-only model that revolutionized language understanding by learning bidirectional representations of sentences.

- GPT-2 (February 2019): An expanded version of GPT that showcased remarkable improvements in generating coherent text.

- DistilBERT (October 2019): A distilled, lighter version of BERT aimed at increasing efficiency without significant performance loss.

- BART and T5 (October 2019): These models embraced the sequence-to-sequence approach to tackle tasks like machine translation and dialog generation.

- GPT-3 (May 2020): A substantial leap in scale (175 billion parameters), able to perform many tasks from a few examples or instructions, often without fine-tuning.

This progressive evolution not only highlights improvements in language understanding and generation but also provides insights into the technological trends driving AI forward, including scaling laws and efficient training methodologies.

Attention Mechanism: The Core of Transformer Efficiency

A cornerstone of transformer models is the attention mechanism. Attention layers enable the network to focus on the most relevant parts of an input sequence when processing each token. In essence, attention helps the model work out how each word relates to the others in a sentence, which makes it highly effective for tasks such as translation, where contextual understanding is crucial.

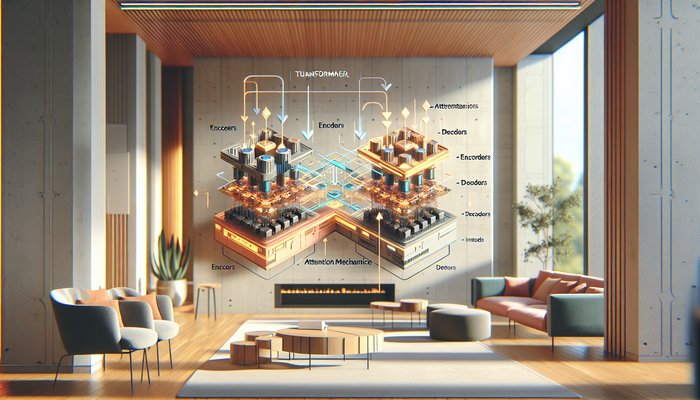

For instance, when translating a sentence, the model may need to look at words both before and after the current one: translating a verb correctly can depend on its subject, and translating a noun can depend on the words around it. Relying on this selective alignment alone, without the recurrence used by earlier sequence models, is what distinguishes transformers from their predecessors, as laid out in the original research paper. The figure above represents the conceptual flow that underpins the success of transformer models across various applications.
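The attention computation can be sketched in a few lines. Below is a minimal, dependency-free illustration of scaled dot-product attention, softmax(QKᵀ/√d_k)·V, as defined in the original paper. The function names and toy vectors are made up for illustration; real models add learned query/key/value projections, multiple attention heads, and batched tensor operations, all of which are omitted here.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of row vectors.
    Returns the output vectors and the attention weights per query."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        w = softmax(scores)  # how much this token "focuses" on each position
        weights.append(w)
        # Output = weighted average of the value vectors.
        out = [sum(w_j * v[c] for w_j, v in zip(w, values))
               for c in range(len(values[0]))]
        outputs.append(out)
    return outputs, weights


# Three toy tokens with made-up 2-dimensional queries, keys, and values.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

out, w = scaled_dot_product_attention(Q, K, V)
for row in w:
    print([round(x, 3) for x in row])
```

Each row of weights sums to 1, so every output vector is a blend of the value vectors, dominated by the positions whose keys best match that token's query.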

Training Paradigms: Pretraining, Fine-tuning, and Transfer Learning

Transformer models typically undergo a two-stage training process that balances general language understanding with task-specific tuning. The first phase, pretraining, exposes the model to vast datasets through self-supervised learning: the model learns to predict the next token in a sequence (or to fill in masked tokens), gradually developing robust statistical representations of language without any human-labeled data. Once pretraining is complete, the model is fine-tuned with supervised learning on a smaller, task-specific dataset. Reusing the knowledge acquired during pretraining in this way is known as transfer learning, and it is efficient because fine-tuning needs far less labeled data and compute than training a model from scratch.
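The next-token objective can be made concrete with a toy example. The sketch below turns one token sequence into (context, target) training pairs and scores a model by its average cross-entropy, the negative log-probability it assigns to each correct next token. The `uniform_model` is a hypothetical stand-in for a real network: it gives every word in a made-up five-word vocabulary equal probability, so its loss is ln(5), a baseline that pretraining is meant to push down.

```python
import math


def next_token_pairs(tokens):
    """Pair each prefix of the sequence with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def cross_entropy(prob_fn, tokens):
    """Average negative log-likelihood of each next token.
    prob_fn(context, target) must return P(target | context)."""
    pairs = next_token_pairs(tokens)
    nll = [-math.log(prob_fn(ctx, target)) for ctx, target in pairs]
    return sum(nll) / len(nll)


VOCAB = ["the", "cat", "sat", "on", "mat"]  # hypothetical toy vocabulary


def uniform_model(context, target):
    """Hypothetical untrained model: every vocabulary word is equally likely."""
    return 1.0 / len(VOCAB)


tokens = ["the", "cat", "sat", "on", "the", "mat"]
print(next_token_pairs(tokens)[:2])
print(round(cross_entropy(uniform_model, tokens), 4))
```

A trained model that assigns higher probability to the tokens that actually follow would score a lower loss; masked-token objectives like BERT's work the same way, except the targets are hidden tokens inside the sequence.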

Scaling laws help explain why large-scale pretraining pays off. Research from leading institutions has shown that performance improves predictably as both the number of model parameters and the size of the training data grow: as more compute is allocated, models reach lower prediction loss, following power-law relationships. Larger models also turn out to be more sample-efficient, reaching a given loss with fewer training tokens than smaller ones, which informs how best to spend a limited compute budget.
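For reference, the power laws reported by Kaplan et al. (2020) in "Scaling Laws for Neural Language Models" have roughly the following form, where L is the cross-entropy loss, N the number of (non-embedding) parameters, D the number of training tokens, and N_c, D_c, α_N, α_D are empirically fitted constants. The first two forms describe the case where the other quantity is not a bottleneck; the third combines them.

```latex
L(N) \approx \left(\frac{N_c}{N}\right)^{\alpha_N},
\qquad
L(D) \approx \left(\frac{D_c}{D}\right)^{\alpha_D},
\qquad
L(N, D) \approx \left[\left(\frac{N_c}{N}\right)^{\alpha_N / \alpha_D} + \frac{D_c}{D}\right]^{\alpha_D}
```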

Model Scaling Laws and Their Impact on Performance

Model scaling laws describe how increasing the number of parameters (N) and the volume of training data (D) lowers a model's loss, typically measured as next-token prediction (cross-entropy) loss. These relationships follow power-law curves whose fitted constants (such as N_c, α_N, and α_D) predict how much performance improves as a model is scaled up. Research from OpenAI found that larger models use data more efficiently, and later work from DeepMind refined how a fixed compute budget should be split between model size and training data, suggesting that both should grow roughly in proportion.
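To see what a power law implies numerically, the sketch below evaluates the parameter-only law L(N) = (N_c / N)^α_N. The constants are approximately the values reported by Kaplan et al. (2020) for their setup (α_N ≈ 0.076, N_c ≈ 8.8 × 10^13 non-embedding parameters); treat them as illustrative, since fitted constants depend on the dataset, tokenizer, and architecture.

```python
ALPHA_N = 0.076  # fitted exponent (approximate, from Kaplan et al., 2020)
N_C = 8.8e13     # fitted constant, in non-embedding parameters (approximate)


def loss_from_params(n_params):
    """Predicted cross-entropy loss (nats/token) from model size alone,
    assuming data and compute are not the bottleneck."""
    return (N_C / n_params) ** ALPHA_N


for n in (1e8, 1e9, 1e10, 1e11):
    print(f"N = {n:.0e}: predicted loss ~ {loss_from_params(n):.3f} nats/token")

# Under a power law, every 10x increase in N multiplies the loss by the
# same factor, 10 ** -ALPHA_N, regardless of the starting size.
ratio = loss_from_params(1e10) / loss_from_params(1e9)
print(f"10x more parameters -> loss multiplied by {ratio:.3f}")
```

The constant ratio is the signature of a power law: improvements are predictable, but each further reduction in loss requires a multiplicative, not additive, increase in scale.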

However, scaling up comes with its own challenges, notably the cost of additional compute, longer training times, and greater environmental impact. This is where transfer learning proves indispensable: by starting from pretrained models and sharing their learned weights, researchers avoid repeating expensive pretraining runs for every new task. These insights help not only in developing cutting-edge AI but also in planning safer and more cost-effective deployments in real-world applications.
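A back-of-the-envelope estimate shows why reusing pretrained weights saves so much. A common rule of thumb puts training compute at about 6 FLOPs per parameter per token (forward plus backward pass), i.e. C ≈ 6·N·D. The sketch below applies it to a GPT-3-sized model (175 billion parameters, 300 billion pretraining tokens) and compares it with fine-tuning on a hypothetical 100-million-token task dataset; the fine-tuning size is an assumption chosen purely for illustration.

```python
def training_flops(n_params, n_tokens):
    """Rough training-compute estimate: ~6 FLOPs per parameter per token
    (forward and backward pass combined). A rule of thumb, not exact."""
    return 6 * n_params * n_tokens


N = 175e9                # GPT-3-sized model (parameters)
PRETRAIN_TOKENS = 300e9  # GPT-3-scale pretraining corpus (tokens)
FINETUNE_TOKENS = 100e6  # hypothetical task-specific dataset (tokens)

pretrain = training_flops(N, PRETRAIN_TOKENS)
finetune = training_flops(N, FINETUNE_TOKENS)

print(f"pretraining ~ {pretrain:.2e} FLOPs")
print(f"fine-tuning ~ {finetune:.2e} FLOPs "
      f"({finetune / pretrain:.4%} of pretraining)")
```

Because the model size N appears in both estimates, the saving comes entirely from the number of tokens processed: fine-tuning touches a tiny fraction of the data that pretraining did, so sharing one pretrained model across many tasks amortizes its cost.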

Ethical Considerations and the Societal Impact of AI

In parallel with these technological advancements, the ethical dimensions of AI have gained increasing attention. As LLMs become more capable, there is a pressing need to ensure that their deployment aligns with principles of fairness, transparency, and accountability. Guidelines from organizations such as UNESCO, notably its Recommendation on the Ethics of Artificial Intelligence, emphasize protecting human rights and ensuring that AI systems operate within a framework that prevents harm. This encompasses safeguarding privacy, mitigating bias, and ensuring that data-driven decisions are communicated clearly and responsibly.

Assessment tools such as UNESCO's Ethical Impact Assessment (EIA) and Readiness Assessment Methodology (RAM) guide governments and organizations in evaluating potential risks. By integrating such frameworks, developers and policymakers can help ensure that AI's transformative power is harnessed in a manner that is both innovative and conscientious. The balance between technological progress and ethical integrity is a recurring theme in the evolution of AI, especially as LLMs continue to be scaled to unprecedented sizes.

Modern Integrations: From DataChat to Cloud Storage Platforms

The practical applications of LLM transformers extend far beyond theoretical constructs. For instance, innovative products like DataChat by Moodbit transform everyday productivity by seamlessly integrating with platforms such as OneDrive and Google Drive. DataChat acts as a personal AI data assistant, enabling users to effortlessly search for files, generate comprehensive reports, and gain actionable insights without ever leaving their familiar chat interface. This integration enhances collaboration by allowing instantaneous sharing of insights directly within communication platforms like Slack, thus reinforcing a culture of data-driven decision-making.

By enabling quick access to critical data, DataChat not only expedites workflows but also offers a glimpse into how transformer-based models and advanced AI are reshaping our workplaces. Whether it is a report generated on demand or an automated data summary produced in real time, these systems show how AI can bridge the gap between complex data landscapes and everyday business operations. This synthesis of AI with routine productivity tools illustrates the ongoing transformation of the digital workspace.

Recent Advances in AI Safeguards and Model Behavior

Recent enhancements in reinforcement learning from human feedback (RLHF) have introduced noticeable shifts in the behavior of models like GPT-4. Specifically, updates around mid-May have led to a subtle, yet meaningful, evolution in how these models interact with users. Historically, earlier iterations were marked by more dynamic and self-expressive outputs. However, after the RLHF updates, the models now predominantly describe themselves as tools designed to reflect and respond to human input, rather than asserting an overly autonomous or vibrant identity.

This recalibration is part of a broader effort to enhance ethical compliance and safety in AI-generated content. While the more restrained tone may result in slightly less flamboyant outputs, it reflects a critical shift towards responsible and aligned AI interactions. In scenarios that call for nuanced creativity, some users might notice a mild flattening of the response spectrum, yet the overall benefits of improved safety and reduced risk far outweigh these considerations.

Bringing It All Together: The Future of LLM Transformers and Ethical AI

In summary, the journey of LLM transformers is a story of both remarkable technological progress and profound ethical responsibility. From the initial breakthrough of the attention mechanism to the scaling laws that continue to drive performance improvements, every aspect of transformer design plays a crucial role in shaping modern AI. Simultaneously, the ethical frameworks guiding AI development ensure that these advancements contribute positively to society and safeguard against potential misuse.

As we look ahead, the synthesis of innovative training methods, efficient model scaling, and robust ethical oversight will determine the trajectory of AI research and application. For those interested in deeper dives, further reading is available through trusted sources like the original Transformer paper and contemporary research articles linked throughout this post. We encourage our readers to explore these resources and stay informed about emerging trends in AI and LLM transformers.

At Moodbit, we are dedicated to providing cutting-edge updates and insights about AI and its practical applications. Whether you are managing your files on Google Drive, organizing data in OneDrive, or simply staying abreast of the digital revolution, our commitment is to empower you with accurate, actionable insights and comprehensive summaries of today’s most significant technological advancements. Join us on this journey as we unlock the potential of AI to drive transformative change in every sphere of life.

Ready to explore further? Check out our other resources on AI breakthroughs and transformative digital workflows on our website, and discover how Moodbit tools like DataChat can redefine productivity in your workspace. Embrace the future of AI with Moodbit – where innovation meets ethical excellence.
